I have been planning to write a blog and share what I know for a while now. My wife has long been trying to convince me to write a tech blog, because she thinks the knowledge I have gained over time is worth sharing with everyone.

So here you go: I am writing my first blog post. Last but not least, I would like to thank my friend Biju Kunjummen, who convinced me to write this blog.

This post is about provisioning a multinode Hadoop cluster with Docker. Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications. See the Docker website if you want to read more about it.

I was struggling with creating and simulating multinode clusters on my laptop. Virtual machines are good but very heavy; they require and consume lots of resources such as CPU and RAM. Docker is lightweight and based on LXC, i.e. Linux containers.

Just as you use pom.xml for Maven, build.xml for Ant, and build.gradle for Gradle (the list is endless), for Docker containers we use a Dockerfile.

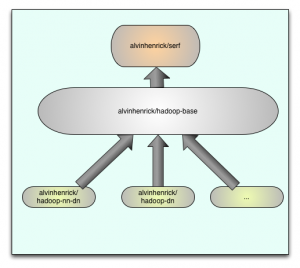

The diagram above details the way I built the containers. The first container (alvinhenrick/serf) is built from Ubuntu 13.10 (Saucy), the base image pulled from the Docker repository. By default, /etc/hosts is read-only in Docker containers. This container solves the problem of FQDN resolution for all containers in the cluster. A team at SequenceIQ solved the problem via dnsmasq and Serf. Serf is an efficient, lightweight tool that uses a gossip protocol to communicate with nodes and broadcast custom events and queries. The only change I made was to fork the repository and build an Ubuntu container instead of CentOS.

You can read more about Serf at http://www.serfdom.io/.

The second container (alvinhenrick/hadoop-base) is built from alvinhenrick/serf. This container takes care of installing Java, SSH, and Hadoop. Hadoop is built from source. Hadoop components communicate with each other via RPC calls and use Google Protocol Buffers for exchanging messages, so this container installs protoc, which is needed to compile and build the required packages. This container also sets up SSH keys (passwordless access), which Hadoop requires to communicate between nodes for configuration etc.
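The passwordless SSH setup mentioned above can be sketched roughly as follows. This is a minimal sketch and not the actual contents of the hadoop-base Dockerfile; the function name setup_passwordless_ssh and its directory argument are hypothetical and only there for illustration.

```shell
#!/bin/bash
# Hypothetical helper: create a passphrase-less RSA key pair in the given
# directory and authorize it for logins to the same account.
# $1 = SSH directory (default: ~/.ssh)
setup_passwordless_ssh() {
  local ssh_dir="${1:-$HOME/.ssh}" pub
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  # -N '' sets an empty passphrase; -q suppresses the progress output.
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -N '' -f "$ssh_dir/id_rsa" || return 1
  fi
  # Authorize the public key only once, so repeated runs add no duplicates.
  pub=$(cat "$ssh_dir/id_rsa.pub")
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF -- "$pub" "$ssh_dir/authorized_keys"; then
    echo "$pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Calling setup_passwordless_ssh as the Hadoop user (hduser in this post) would leave that user able to ssh to any node that shares the same key pair without a password prompt.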

The third container (alvinhenrick/hadoop-nn-dn), as you might have guessed, contains the configuration for the master container: the NameNode, DataNode, and YARN processes.

The fourth container (alvinhenrick/hadoop-dn) contains only the configuration for slave nodes: the DataNode and YARN processes.

Both master and slave containers also make use of the Unix utility daemontools to run the sshd, serf, and dnsmasq services in the background, attached to the lifecycle of the Docker container. The only way to stop the services is to stop the container. I have only configured the slave containers this way because we can log in to any slave container via ssh from the master anyway. Why open multiple terminals when we don't have to?

The master container starts the HDFS and YARN services for the whole cluster. Please refer to the Apache Hadoop documentation for more details.

I decided to start the master container in foreground mode, so after a successful start-up it takes you to the bash shell prompt.

Prerequisite

- Docker must be installed on the host computer / laptop.

- git clone https://github.com/alvinhenrick/docker-serf

- Change directory to where you cloned the above repository.

- docker build -t alvinhenrick/serf .

The above steps build the image that takes care of host name resolution between containers.
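If you want to script the prerequisite check, something like the following could run before the build steps. It is only a sketch; require_command is a hypothetical helper name, not something from the repositories.

```shell
#!/bin/bash
# Hypothetical helper: fail early when a required command is missing.
# $1 = name of the command to look for on the PATH
require_command() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "Required command not found: $1" >&2
    return 1
  fi
}
```

For this post you would call it as require_command docker && require_command git before cloning and building anything.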

Steps to build multinode hadoop cluster

git clone https://github.com/alvinhenrick/hadoop-mutinode

Build hadoop-base container.

- Change directory to hadoop-mutinode/hadoop-base.

- docker build -t alvinhenrick/hadoop-base .

- The build will take a while, so go grab a cup of coffee or whatever drink you like 🙂

Build hadoop-dn Slave container (DataNode / NodeManager).

- Change directory to hadoop-mutinode/hadoop-dn.

- docker build -t alvinhenrick/hadoop-dn .

Build hadoop-nn-dn Master container (NameNode / DataNode / Resource Manager / NodeManager).

- Change directory to hadoop-mutinode/hadoop-nn-dn.

- docker build -t alvinhenrick/hadoop-nn-dn .
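The three build steps above can also be scripted. The sketch below assumes you run it from the root of the hadoop-mutinode checkout and that alvinhenrick/serf has already been built; build_hadoop_images is a hypothetical name, and passing the directory to docker build is just an alternative to changing into it and using a dot.

```shell
#!/bin/bash
# Hypothetical helper: build the three Hadoop images in the same order as
# the manual steps (base image first, then slave, then master).
build_hadoop_images() {
  local name
  for name in hadoop-base hadoop-dn hadoop-nn-dn; do
    echo "Building alvinhenrick/$name ..."
    # The last argument is the build context directory. Stop at the first
    # failure so later images are not built after a broken step.
    docker build -t "alvinhenrick/$name" "$name" || return 1
  done
}
```

Run build_hadoop_images once and it either finishes all three builds or stops at the first one that fails.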

Starting containers.

- Change directory to hadoop-mutinode.

- ./start-cluster.sh

- Run the following shell script at the prompt shown below.

- root@master:/# /usr/local/hadoop/bin/start-hadoop.sh

- root@master:/# su - hduser

- hduser@master:/# jps

- hduser@master:/# hdfs dfs -ls /

Execute the following command on the host machine:

docker ps

You should see the following output:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7eb0809a8f95 alvinhenrick/hadoop-nn-dn:latest /bin/bash 11 minutes ago Up 11 minutes 0.0.0.0:49172->50010/tcp, 0.0.0.0:49173->50090/tcp, 0.0.0.0:49174->7946/tcp, 0.0.0.0:49175->8033/tcp, 0.0.0.0:49176->8042/tcp, 0.0.0.0:49177->8060/tcp, 0.0.0.0:49178->50075/tcp, 0.0.0.0:49179->50475/tcp, 0.0.0.0:49180->8030/tcp, 0.0.0.0:49181->22/tcp, 0.0.0.0:49182->50070/tcp, 0.0.0.0:49183->7373/tcp, 0.0.0.0:49184->8031/tcp, 0.0.0.0:49185->8040/tcp, 0.0.0.0:49186->8088/tcp, 0.0.0.0:49187->50020/tcp, 0.0.0.0:49188->50060/tcp, 0.0.0.0:49189->8032/tcp, 0.0.0.0:49190->9000/tcp master

c9a425e258c5 alvinhenrick/hadoop-dn:latest /usr/bin/svscan /etc 11 minutes ago Up 11 minutes 0.0.0.0:49153->50075/tcp, 0.0.0.0:49154->50475/tcp, 0.0.0.0:49155->8030/tcp, 0.0.0.0:49156->22/tcp, 0.0.0.0:49157->50070/tcp, 0.0.0.0:49158->7373/tcp, 0.0.0.0:49159->8031/tcp, 0.0.0.0:49160->8040/tcp, 0.0.0.0:49161->8088/tcp, 0.0.0.0:49162->50020/tcp, 0.0.0.0:49163->50060/tcp, 0.0.0.0:49164->8032/tcp, 0.0.0.0:49165->9000/tcp, 0.0.0.0:49166->50010/tcp, 0.0.0.0:49167->50090/tcp, 0.0.0.0:49168->7946/tcp, 0.0.0.0:49169->8033/tcp, 0.0.0.0:49170->8042/tcp, 0.0.0.0:49171->8060/tcp slave1

You will notice that the output of the above command lists all the running containers. At the end of each row you will see the container name, master and slave1 respectively.

You will also notice values like 0.0.0.0:49172->50010/tcp under the PORTS column. These are the port forwarding mappings from host ports to container ports: here, host port 49172 forwards to container port 50010.

In the Dockerfile I use the EXPOSE instruction to declare these container ports, and in start-cluster.sh I use the -P option to publish them to the host machine.

There are two ways to publish ports. The first is the -p option, for example -p 80:8080, which lets you specify the port forwarding manually as host port:container port.

I chose the second, the -P option, which instructs Docker to map every exposed port to a random port on the host machine. Docker starts the random series around port 49000, and that is what you see in the output above.

The NameNode HTTP port 50070 is mapped to 49182. It is a random assignment by Docker, so it will most likely be different on your machine when you start the cluster.

You can access the NameNode UI at http://localhost:49182. This only applies if your host machine runs Linux. If you are using boot2docker on Mac OS X, retrieve the IP address of the Docker VM with the following command.

boot2docker ip

For example, on my machine it is 192.168.59.103, so the NameNode URL on my machine is http://192.168.59.103:49182
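Since the host port changes on every start, you may prefer to look it up instead of reading it from the docker ps output. The sketch below uses docker port, which prints the host side of a mapping for a given container port; namenode_url is a hypothetical helper name, and the defaults (localhost, master, port 50070) are taken from this post.

```shell
#!/bin/bash
# Hypothetical helper: print the NameNode UI URL for a running container.
# $1 = host name or IP that reaches the Docker host (default: localhost)
# $2 = container name (default: master)
namenode_url() {
  local host="${1:-localhost}" container="${2:-master}" mapping
  # "docker port" prints the host side of the mapping, e.g. 0.0.0.0:49182.
  # Some Docker versions print more than one line, so keep only the first.
  mapping=$(docker port "$container" 50070 | head -n 1)
  [ -n "$mapping" ] || return 1
  # Strip everything up to the last colon to keep only the port number.
  echo "http://${host}:${mapping##*:}"
}
```

On a Linux host namenode_url with no arguments should be enough; with boot2docker you would pass the VM address, for example namenode_url "$(boot2docker ip)".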

Please read the docker documentation for more details.

To stop and remove the cluster containers you will need the following commands.

#To stop containers

docker stop master

docker stop slave1

#To rm containers

docker rm master

docker rm slave1
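The same teardown can be wrapped in a small loop, which helps once there are more than two containers. This is only a sketch; teardown_cluster is a hypothetical name, and the default container names are the ones used in this post.

```shell
#!/bin/bash
# Hypothetical helper: stop and remove the named containers.
# With no arguments it falls back to the two containers used in this post.
teardown_cluster() {
  local name
  [ "$#" -gt 0 ] || set -- master slave1
  for name in "$@"; do
    # Remove a container only after it has been stopped successfully.
    docker stop "$name" && docker rm "$name"
  done
}
```

For a three-node cluster you would call teardown_cluster master slave1 slave2.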

Adding more slaves to the cluster is very simple. We just need to modify two files, following the steps below.

- Add an entry for the new slave to the slaves Hadoop configuration file inside the hadoop-mutinode/hadoop-nn-dn/config directory.

- Rebuild the master Docker container.

- Modify start-cluster.sh as shown below.

#!/bin/bash

###slave1

## slave1: the -d option runs the container in detached (daemon) mode

docker run -d -t --dns 127.0.0.1 -e NODE_TYPE=s -P --name slave1 -h slave1.mycorp.kom alvinhenrick/hadoop-dn

##Capture the IP address assigned to first container by docker

FIRST_IP=$(docker inspect --format="{{.NetworkSettings.IPAddress}}" slave1)

## slave2: the -d option runs the container in detached (daemon) mode; JOIN_IP passes the IP address of slave1

## Make sure you add an entry for slave2 to the Hadoop slaves config file on the master node and rebuild the image as described above.

docker run -d -t --dns 127.0.0.1 -e NODE_TYPE=s -e JOIN_IP="$FIRST_IP" -P --name slave2 -h slave2.mycorp.kom alvinhenrick/hadoop-dn

###master

## -i option runs the container in interactive mode

docker run -i -t --dns 127.0.0.1 -e NODE_TYPE=m -e JOIN_IP="$FIRST_IP" -P --name master -h master.mycorp.kom alvinhenrick/hadoop-nn-dn
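If you expect to add slaves more than once, the two manual edits can be generalized. The sketch below is not part of the repository: add_slave_entry and start_slaves are hypothetical helpers, the docker flags are copied from the script above, and I am assuming the slaves file holds one host name per line, so use whatever form the existing entries in that file use.

```shell
#!/bin/bash
# Hypothetical helpers; the docker flags mirror start-cluster.sh above.

# Append a host name to the Hadoop slaves file unless it is already listed.
# $1 = path to the slaves file, $2 = host name to add
add_slave_entry() {
  local file="$1" host="$2"
  touch "$file"
  if ! grep -qxF -- "$host" "$file"; then
    # Add a newline first if the file does not already end with one.
    [ -z "$(tail -c 1 "$file")" ] || echo >> "$file"
    echo "$host" >> "$file"
  fi
}

# Start slave1..slaveN in detached mode. slave1 starts alone and every
# later slave receives its IP address through JOIN_IP.
# $1 = number of slaves (default: 2)
start_slaves() {
  local count="${1:-2}" i=2
  docker run -d -t --dns 127.0.0.1 -e NODE_TYPE=s -P \
    --name slave1 -h slave1.mycorp.kom alvinhenrick/hadoop-dn || return 1
  # FIRST_IP is left global on purpose so the master can be started with it.
  FIRST_IP=$(docker inspect --format="{{.NetworkSettings.IPAddress}}" slave1)
  while [ "$i" -le "$count" ]; do
    docker run -d -t --dns 127.0.0.1 -e NODE_TYPE=s -e JOIN_IP="$FIRST_IP" -P \
      --name "slave$i" -h "slave$i.mycorp.kom" alvinhenrick/hadoop-dn || return 1
    i=$((i + 1))
  done
}
```

A three-slave setup would then be add_slave_entry hadoop-nn-dn/config/slaves slave3.mycorp.kom, a rebuild of the master image, and start_slaves 3 followed by the unchanged master docker run line.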

Please feel free to send suggestions and feedback.

Have a wonderful read!